Wrap-Up

First, congratulations to the top five teams, who all finished before hints were released on Monday: have you tried random anagramming; Tasty Samoas, Please Ingest; Killer Chicken Bones; Plugholate chip cookies; and Just for the Halibut. In total, 45 teams finished the hunt (with 4 more in endgame when it concluded)! Over 550 teams were registered, almost 450 of them solved a puzzle, and about 200 finished the intro round by solving Consolation Prize.

The rest of this page will be a recap of the process of making this hunt, followed by some fun stats, so as a warning, there will be lots of puzzle spoilers. It’s also very long, so feel free to just skip to the fun stuff!

Plot Summary

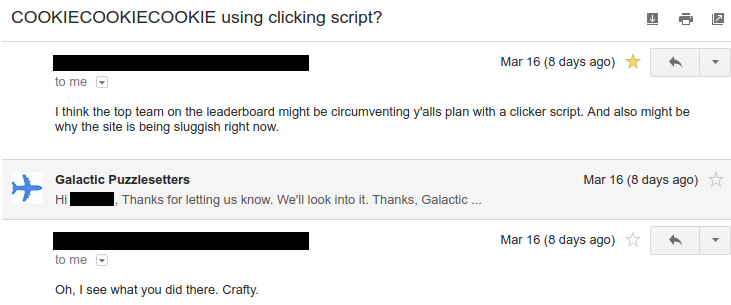

At the start of the hunt, all teams began an intense cookie clicking competition in a race to a billion cookies! Solving puzzles gave teams an ever-increasing number of cookies which would, in turn, unlock more puzzles. However, it soon became clear that something was amiss—a strange team, COOKIECOOKIECOOKIE, was gaining cookies at an alarmingly fast rate! Eventually, this mysterious team, with only the team member “me”, would win with a billion cookies, while every other team had far fewer cookies.

A few teams noticed ahead of time.

Each team saw a customized view of COOKIECOOKIECOOKIE’s progress on their leaderboard, which scaled with their own puzzle solves. After fully unlocking the first round, they were sent a notification that COOKIECOOKIECOOKIE had won the hunt. However, shortly after, they learned that this team did not in fact solve any puzzles and instead got their cookies through somewhat questionable means. Teams were therefore tasked with investigating COOKIECOOKIECOOKIE by solving the puzzle they had conveniently left behind: Consolation Prize, the first round meta (the specific emails we sent to teams are in the Story tab).

Solving the meta revealed that COOKIECOOKIECOOKIE was in fact Cookie Monster, who had his own nefarious plans to take over the entire galaxy’s cookie supplies. This led to the second part of the hunt, where teams collected three other cookie types: Chessmen, Fortune Cookies, and Animal Crackers, each with a round of eight puzzles and one metapuzzle. By solving each meta, teams received three tools: an ANDROID, a BEST-SELLING CRIME NOVEL, and a CALL SIGN.

However, these tools were unusable without The Great Galactic Oven, which had mysteriously disappeared. After finishing the three metas, teams followed the trail to the Great Galactic Bakery (the start of the metameta), where they learned that the Cookie Monster had stolen the Oven! They then had to use the three cookie meta answers to determine the Cookie Monster’s responses to the website’s three security questions, and then edit a suspicious-looking browser cookie in order to log in as the Cookie Monster.

Once teams broke into Cookie Monster’s account, they were tasked with getting rid of all of his cookies using the three tools, now powered by the Oven. Each tool was capable of performing a specific manipulation on the number values of the four cookie types. Teams that completed the hunt were thanked for saving the galaxy from the Cookie Monster, and were sent a certificate in lieu of the one billion cookies, which had sadly been appropriated for use in restoring the cookie economy.

Dear <teamname>,

You did it! You brought the Cookie Monster down to 0 cookies, and saved us from the cookie apocalypse. Congratulations on finishing the hunt!

Unfortunately, all of our cookies are being used to help rebuild the cookie economy in the aftermath of the Cookie Monster’s rampage, so we are unable to award you the prize of 1 billion cookies.

Instead, we hope this certificate will serve as an adequate replacement!

Best, Galactic Puzzlesetters

Writing the Hunt

Goals

Some of our goals for this year were:

- Better route easy puzzles to less experienced teams, and make the hunt more satisfying for people of all skill levels.

- More tightly integrate the theme into the puzzles and hunt structure.

- Do something clever with the scoreboard :)

- Develop a more organized system of writing, test-solving, and validating puzzles.

Theme and Structure

We had planned on starting work on the hunt around October/November, which we thought would give us plenty of time to get the hunt to a polished state. We started seriously discussing and voting on the theme towards the end of September.

When thinking about the overall theme, we tried to consider combinations of story, structure, and a final metapuzzle that strongly complemented one another. The Cookie Clicker theme was suggested early on and many people were excited about it, so we decided to pursue it after fleshing out the structure of the metapuzzles.

For the structure, we had the idea for the final hacking puzzle and “clickaround” relatively early on, although the details of the puzzle were revised a few times. We decided fairly early that we wanted an intro round and roughly the 40 puzzles we ended up making for the hunt this year. We called for round metapuzzle ideas in November and finalized them in December. The metapuzzles were made mostly independently, so it was a happy coincidence that we ended up with 8 puzzles in each round, despite the meta answers all having distinct lengths.

Other parts of the structure were finalized much later. We waited until most of the puzzles had been written before deciding on the unlock structure and puzzle order, and the precise details of the story were modified multiple times. We ended up putting the most difficult/long puzzles (o ea / Wrd Srch, Destructive Interference, Adventure, Third Rail, Pride and Accomplishment, USPC) in the early/middle parts of each round because we thought that a) teams are more likely to encounter them when their brains are still sharp, b) putting them later in the round would make them much more likely to get backsolved, and c) getting a few easier puzzles near the end of the hunt might feel relaxing and rewarding after getting through some tough puzzles. The one exception was Cryptic Command, which was placed late in the round to separate it from the other cryptic, Earth-shattering.

The hint system was also finalized very late. We didn’t want to use yes/no questions again, as we found last year that they weren’t nearly as helpful as we thought, so we considered either having canned hints or freeform hints (or some combination, like selecting from a dropdown of possible hints). We went with freeform hints as we thought those would be the most helpful overall, though we put a strict cap on the number, as we got really burned out by the “unlimited hints” from last year.

Puzzle Writing and Testsolving

This year we used puzzletron to organize the puzzle-writing, editing, testsolving, and factchecking process. This proved fairly effective and was helpful when managing testsolving groups and monitoring the status of all the puzzles in our hunt, especially compared to the Google Sheets-based approach we used last year. Each puzzle was assigned an editor to review the idea and contribute feedback at each stage of writing and revision, although in most cases additional revision was done more informally.

We did most of our puzzle writing after Mystery Hunt in late January and early February and set internal deadlines for various puzzle milestones. A lot of our testsolving was done impromptu, but later on we organized various internal testsolving sessions to refine our unfinalized puzzles.

We tried running a “full-hunt testsolve” the weekend before the hunt, but we didn’t end up getting enough testsolvers to make it through the whole hunt. This meant that the endgame of editing your cookie to pose as the Cookie Monster was untested going into the hunt. To compensate, we tried to make the flavortext clue browser cookies (and the security questions) as clearly as possible.

Organization

Once again, we used a Discord server as a primary means of communication and organization. We had numerous meetings early on to discuss the theme and structure, and meetings to finalize hunt details.

As the hunt began, we had dedicated alert channels for team registrations, email reply discussion, hint requests and discussion, and answer submissions. (Submissions for The Answer to This Puzzle Is… were given a channel of their own.)

Website

The vast majority of our website was built using Django. We were able to reuse various parts of last year’s hunt code, although this year’s theme involved a lot of additional development work. The cookie clickers required extra considerations to handle the scale we were expecting. The backend was built using a Go websocket server with Redis to handle storage of the cookie state; the frontend was built with Vue.js.

Several teams have asked us for the source code for our site so that they can build a hunt of their own. We are still discussing whether and how we would want to do this. However, if you have questions about how we accomplished something on the tech side, feel free to email us and we’ll do our best to answer.

Reflections on the Hunt

Timing

We had some trouble predicting how long the hunt would take. We’d hoped that at least a few teams would finish by Sunday night, before hints were released on Monday, but for all we knew, 10 teams could have finished on Saturday; we ran a prediction contest, and guesses for the first finish ranged from Saturday evening to Monday afternoon. We were a little worried when many teams ran into all of the hard/long puzzles on Saturday, but solvers gradually made their way through the gauntlet and 5 teams ended up finishing Sunday evening, which we were very happy (and relieved) about.

Some teams remarked that they weren’t expecting the hunt to take so long and wished that they had known what they were getting into beforehand. While we also weren’t sure how long it would take, we could have made it clearer in the FAQs that the second half of the hunt would be quite hard.

General Format

In general, teams seemed to prefer this year’s progress-based format. Many teams liked the fact that the most competitive portion of the hunt was on a weekend, as it’s much easier to block out a weekend to do puzzles as opposed to finding time every day for a week. Teams also very much liked how they could solve at their own pace. However, a considerable number of teams said that they preferred the more relaxed pace of last year’s time-based (“Australian”) format, and also liked how you would be guaranteed new puzzles to work on each day, as opposed to potentially getting roadblocked on the harder puzzles in this format. In the future, we’ll continue to experiment with the format and try to address the issues brought up in feedback.

Virtually every team that commented on the “intro round” of 12 easier puzzles thought it was a good idea, as it provided a warm-up for the later puzzles and also served as a milestone that yielded a sense of pride and accomplishment.

One small thing we wish we had done was to start the hunt a few hours later (perhaps at 6:28 PM) to better accommodate work schedules for American solvers.

Unlock Structure

We had a lot of difficulty deciding how many puzzles would be open to teams: too few and you might have bottlenecks, too many and you can easily feel overwhelmed. We eventually came up with the unlock numbers you saw in the hunt, which meant that an average of ~5 puzzles were open at any time in the first round of 12 puzzles, and ~7 puzzles were open among the later rounds. These numbers worked well for top teams, but many others thought they felt too bottlenecked immediately after the first round (and no team said that they were overwhelmed with the number of open puzzles), so we think 8-9 puzzles open at a time may have provided a better experience overall.

Because our three metas were fairly backsolvable, we intentionally required you to solve at least 6 out of 8 of the puzzles in the round before the meta could be unlocked. We also intentionally positioned some of the easier puzzles towards the end of the round so that teams couldn’t simply backsolve all of our hardest puzzles. This did lead to some frustration, but we still think it was the right decision. However, it might have boosted teams’ morale to let them know that they could expect easier puzzles later on.
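As a rough illustration of the meta unlock rule above, here is a minimal Python sketch. The function and constant names are hypothetical and chosen only for this example; it is not taken from the hunt's site code.

```python
ROUND_SIZE = 8          # puzzles per cookie round (from the text above)
META_UNLOCK_SOLVES = 6  # solves required before the meta opens (from the text above)


def meta_unlocked(solved_in_round: int) -> bool:
    """Return True once enough puzzles in the round have been solved."""
    if not 0 <= solved_in_round <= ROUND_SIZE:
        raise ValueError("solve count must be between 0 and ROUND_SIZE")
    return solved_in_round >= META_UNLOCK_SOLVES


def max_backsolvable(solved_before_meta: int = META_UNLOCK_SOLVES) -> int:
    """Puzzles still unsolved when the meta opens: the most a team could backsolve."""
    return ROUND_SIZE - solved_before_meta
```

Under this rule, a team that unlocks the meta at the earliest possible moment can backsolve at most 2 of the 8 puzzles in the round.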

We recognized that some teams would inevitably get stuck in our very difficult cookie rounds, so we also auto-unlocked more and more of the hunt over the course of the week so that every team would get a chance to see every puzzle. The reaction to this auto-unlocking was more mixed than we expected: several teams felt that they “lost their progress” when the auto-unlocking caught up to them, or that the large number of puzzles available at the end was too overwhelming. We do want teams to be able to see the whole hunt if they want, but we’ll keep this feedback in mind for the future.
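One way to picture how auto-unlocking interacts with earned progress is as a time-based floor on the number of open puzzles. The sketch below is purely illustrative: the start time, unlock rate, and function names are assumptions made up for the example, not the schedule the hunt actually used.

```python
def time_floor(hours_elapsed: float, total_puzzles: int,
               start_hour: float, puzzles_per_hour: float) -> int:
    """Puzzles opened for everyone purely by the passage of time."""
    if hours_elapsed <= start_hour:
        return 0
    opened = int((hours_elapsed - start_hour) * puzzles_per_hour)
    return min(total_puzzles, opened)


def puzzles_open(earned: int, hours_elapsed: float,
                 total_puzzles: int = 40,       # roughly the hunt's size
                 start_hour: float = 72.0,      # hypothetical: floor starts after the weekend
                 puzzles_per_hour: float = 0.5  # hypothetical unlock rate
                 ) -> int:
    """A team sees whichever is larger: what it earned, or the time-based floor."""
    floor = time_floor(hours_elapsed, total_puzzles, start_hour, puzzles_per_hour)
    return max(earned, floor)
```

In this model, a team that has earned 10 puzzles keeps its lead only until the floor passes 10. After that, its open set is the same as that of a team with no solves, which is one way to read the “lost their progress” feedback.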

Difficulty/Puzzle Selection

This hunt had several very difficult and/or long puzzles. These have generally been the most polarizing in terms of feedback, partially because some were unnecessarily long or difficult, but also because spending time on a puzzle without solving it generally doesn’t feel very good. On the other hand, the difficult/long puzzles are often the most memorable and/or fun moments from a hunt if they do get solved, so there is a balance that needs to be struck here (e.g. although some teams thought Adventure was too difficult or technical, many others saw it as one of the highlights of the hunt). We felt that we had a pretty good balance overall, though some of the harder puzzles could certainly have used a few tweaks.

We also think we had a good variety in puzzles, from wordplay/crosswords to logic puzzles to more research-oriented puzzles. Everyone has different preferences and dislikes, so we hope that all solvers were able to find a good number of puzzles that clicked with them.

Hint System

Many teams preferred this year’s personalized, freeform hints to last year’s Y/N hints. We did get a few requests from teams who wanted more than two hints per day, but anything much more than the volume we experienced this year would be prohibitively time-consuming for us. We responded to 1646 hint requests in total, which is a comparable number to last year, but it didn’t feel nearly as overwhelming because it was spread out more evenly throughout the week.

Some teams mentioned in the feedback that our hints could be overly cryptic at times. We tried to strike a balance between not giving away exactly what to do on a puzzle and still providing a useful nudge forward, though there was certainly variation in individual hints. We apologize if you received a particularly unhelpful hint, but we hope that the hint system was beneficial overall.

In the future, we’ll consider further modifications to this system, such as potentially incorporating canned hints, or somehow using larger hints to help teams that feel bottlenecked.

Organization

We thought that our system of assigning “puzzle editors” to review and contribute to ideas worked well, and contributed to the (we hope) higher puzzle quality this year. However, there were a few puzzles that did not get the full testsolving treatment, including Cryptic Command and Pride and Accomplishment as mentioned above, mostly due to the fact that they required multiple revisions and each testsolve required multiple people due to their difficulty.

We ended up revising puzzles as late as the week before the hunt. This was due to a combination of various factors including running out of unspoiled testsolvers—particularly for puzzles that had already been through multiple rounds of testsolving—and a lack of enforcement for puzzle-writing deadlines. We’re thinking of ways to improve our organizational structure next year, but we will also likely scale down the hunt a bit (or start preparing much earlier in the year), as a lot of us felt too overworked despite having about a month more time than last year.

Fun Stuff

Here are some fun items we created and compiled over the course of the hunt:

- A list of most-common wrong answer submissions, as well as some submissions we liked

- A playlist of questionably-related songs

- A compilation of fun stories / things solvers sent us

- A bonus puzzle that we didn’t end up using

Statistics

During and after the hunt, we put together several different things to visualize or better understand how teams were doing. Here are some of them:

- A table of first solve and clickaround statistics

- A spreadsheet with puzzle statistics on forward solves vs. backsolves as well as solves with hints vs. no hints, and rankings of teams by first to N puzzles solved

- Big Board (the page we used to monitor teams’ global progress)

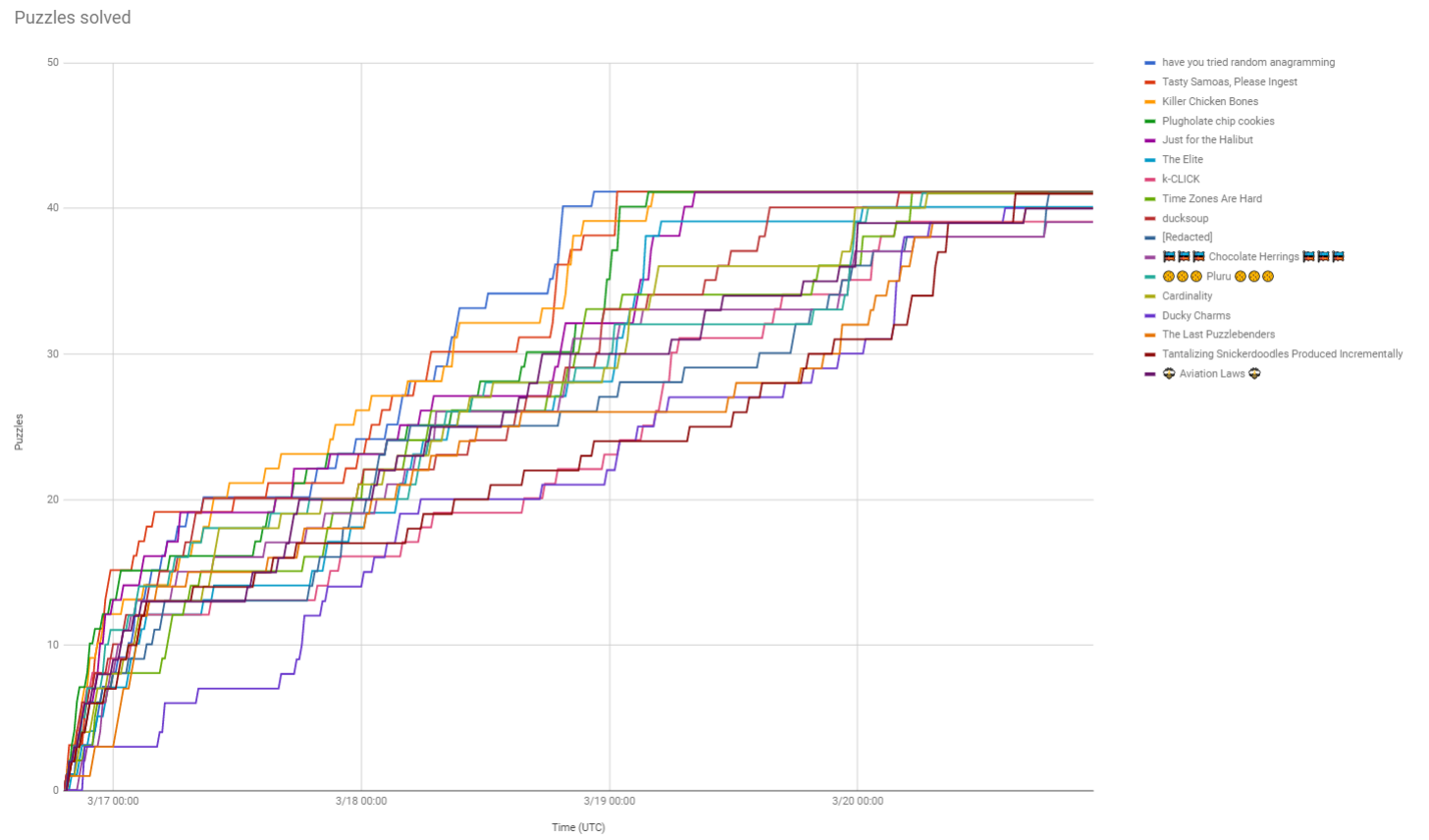

- Progress charts for the 45 teams who finished the hunt

- A complete guess log for the entire hunt (if you come up with any interesting statistics, please share them with us!)

- Extended stats and commentary for The Answer to This Puzzle Is..., with a link to a spreadsheet for exploring the data

- A general stats page with information on puzzle solves and hints used

Finally, here are some fun one-off statistics:

- 447 teams solved at least one puzzle.

- 203 teams solved the Consolation Prize.

- 45 teams finished the hunt.

- Cookie Monster’s account received just over 350 password reset requests.

- Team ducksoup clicked the most cookies, with 2,888,776.

- Portugal. The man. The plan. The canal. finished the hunt having earned the fewest cookies by clicking, with only 1404.

- Over the course of the hunt, a total of 17,054,912 cookies were made by clicking.

- There were 6,495 solves over the duration of the hunt, and 38,508 guesses made.

- Try and Eat was the most solved puzzle, with 420 solves.

- The fastest meta solve came from Snickerdoodles, who solved the Animal Crackers meta within 5:06 of unlock (we knew they already had the mechanic figured out, as they were trying to backsolve answers before unlocking the meta!)
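For anyone who wants to play with these numbers, a few derived ratios can be computed directly from the figures listed above. This is a small sketch; the variable names are just for illustration, and only the input values come from the list.

```python
# Figures copied from the one-off statistics above.
teams_with_a_solve = 447
intro_finishers = 203      # teams that solved Consolation Prize
hunt_finishers = 45
total_solves = 6495
total_guesses = 38508
total_clicked = 17_054_912
top_clicker = 2_888_776    # Team ducksoup


def pct(part: float, whole: float) -> float:
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


intro_rate = pct(intro_finishers, teams_with_a_solve)    # share of solving teams that finished the intro round
finish_rate = pct(hunt_finishers, teams_with_a_solve)    # share of solving teams that finished the hunt
guesses_per_solve = total_guesses / total_solves         # average guesses submitted per correct answer
solves_per_team = total_solves / teams_with_a_solve      # average solves per team with at least one solve
top_click_share = pct(top_clicker, total_clicked)        # one team's share of all clicked cookies

if __name__ == "__main__":
    print(f"Intro round completion: {intro_rate:.1f}%")
    print(f"Hunt completion: {finish_rate:.1f}%")
    print(f"Guesses per solve: {guesses_per_solve:.2f}")
    print(f"Solves per solving team: {solves_per_team:.1f}")
    print(f"Top clicker's share of clicks: {top_click_share:.1f}%")
```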

Solves over time for the top teams.

Best Solutions

Some of our puzzles this year, particularly Adventure and Make Your Own Fillomino, have a great many possible approaches. If you have a particularly interesting or efficient solution to one of these puzzles, we’d love to hear about it! Visit the solution pages for those puzzles for more details.

Credits

Editors: Colin Lu, Jon Schneider, Rahul Sridhar, Anderson Wang, and Ben Yang

Puzzle Authors: Josh Alman, Danny Bulmash, Abby Caron, Lewis Chen, Brian Chen, Alan Huang, Lennart Jansson, Chris Jones, Ivan Koswara, Mitchell Lee, Colin Lu, Seth Mulhall, Max Murin, Nathan Pinsker, Jon Schneider, Rahul Sridhar, Charles Tam, Robert Tunney, Anderson Wang, Patrick Xia, Ben Yang, Yannick Yao, and Lucy Zhang

Web Developers: Mitchell Gu, Alan Huang, Lennart Jansson, Chris Jones, Nathan Pinsker, Jon Schneider, Nick Wu, and Leon Zhou

Additional Test Solvers and Fact Checkers: Phillip Ai, Nick Baxter, Lillian Chin, Neil Fitzgerald, Andrew Hauge, DD Liu, Ian Osborn, Nick Wu, Patrick Yang, and Leon Zhou

Special Thanks:

Julien Thiennot, developer of Cookie Clicker

The Cookie Monster

Puzzlers like you! Thank you all for participating in our hunt.